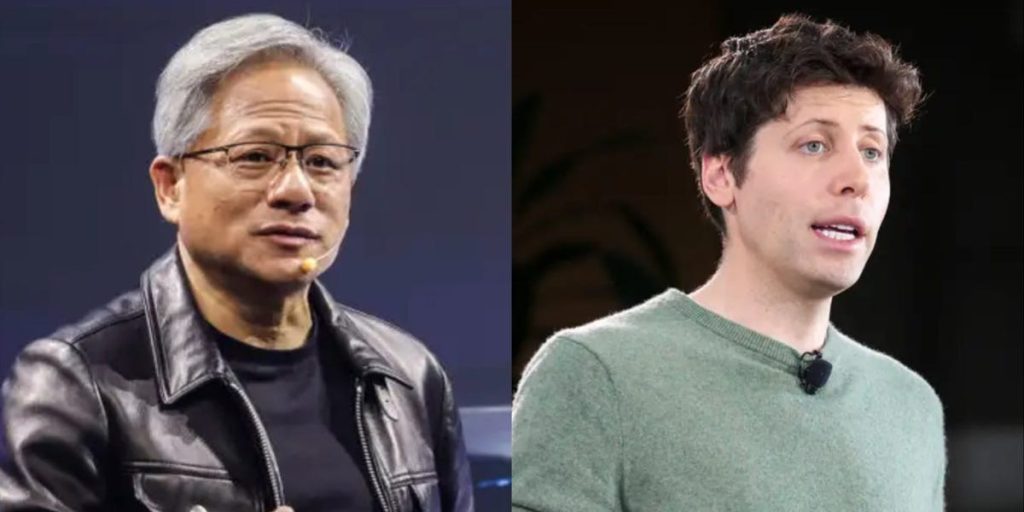

Two of the biggest names in AI are teaming up.

Nvidia and OpenAI announced on Monday that they are becoming strategic partners, and the chipmaker says it will invest up to $100 billion in OpenAI to help it build out AI data centers.

OpenAI plans to construct "at least 10 gigawatts" of AI data centers running Nvidia systems, a massive amount of computing power that the company will use to train and run its models, such as those powering ChatGPT.

"Nvidia and OpenAI have pushed each other for a decade, from the first DGX supercomputer to the breakthrough of ChatGPT," Nvidia CEO Jensen Huang said in a statement. "This investment and infrastructure partnership mark the next leap forward—deploying 10 gigawatts to power the next era of intelligence."

Nvidia's stock rose 4% after the announcement.

Ten gigawatts is the equivalent of roughly 4 million to 5 million GPUs, Huang told CNBC in an interview. That is about the total number of GPUs Nvidia expects to ship this year.

According to the companies, the first phase of the partnership is "targeted to come online in the second half of 2026 using Nvidia's Vera Rubin platform." The Vera Rubin platform introduces a new CPU and networking architecture.

"Everything starts with compute," said OpenAI CEO Sam Altman, who has sought to raise billions over the past year to fund the development of the company's frontier AI models, such as GPT-5.

OpenAI is in the process of converting into a for-profit company, a move that requires regulatory approval and contract negotiations with Microsoft, its biggest investor. The change is expected to make it easier for OpenAI to attract the billions in investment it needs to stay competitive in the AI race.

“Compute infrastructure will be the basis for the economy of the future, and we will utilize what we’re building with Nvidia to both create new AI breakthroughs and empower people and businesses with them at scale,” Altman said in a statement.

The announcement comes amid a spending spree by Big Tech and leading AI companies on AI infrastructure, a race so massive that some economists say it is moving the needle on the entire US economy. Microsoft (an early OpenAI investor), Meta, and Alphabet all expect to spend billions on capital expenditures in the coming year.

Meta CEO Mark Zuckerberg has said the bigger risk is moving too slowly.

“If we end up misspending a couple of hundred billion dollars, I think that that is going to be very unfortunate, obviously,” Zuckerberg said during a recent appearance on the “Access” podcast. “But what I’d say is I actually think the risk is higher on the other side.”

“The risk, at least for a company like Meta, is probably in not being aggressive enough rather than being somewhat too aggressive,” Zuckerberg added.

Tech companies are also racing to strike energy deals to power their massive data centers. Amazon, Google, and Microsoft have all announced deals with nuclear energy providers.

The AI data center build-out in the US has proven controversial in many of the communities where the facilities are located, often drawing pushback from locals and environmental groups and straining energy grids.

OpenAI and Big Tech companies already spend huge amounts on Nvidia's chips to train and run their AI systems. In its second-quarter earnings report, Nvidia said that just two unnamed customers, widely believed to be among the major Big Tech companies, accounted for 39% of its revenue.